100 Post-Training Survey Questions


Training doesn’t end when the session closes. The real question is: did it work? A training effectiveness survey helps you answer that by capturing feedback directly from learners. Done well, it shows whether your training landed, what should be improved before the next round, and which elements are worth repeating.

The urgency is real. Employers projected that 44% of workers’ skills would be disrupted within five years, according to the World Economic Forum. Strong training helps people adapt, stay engaged, and stick with your organization.

In this blog, you’ll learn what a training effectiveness survey is, when to use it, and how to design one that gives you clear, actionable insights. You’ll also find 100 ready-to-use questions organized by theme with suggested answer types, along with research-backed benefits you can share with leadership. To wrap up, we’ll cover best practices for keeping surveys short, focused, and valuable for both learners and decision-makers.

“The growth and development of people is the highest calling of leadership.”

– Harvey S. Firestone

Key Takeaways

- What is a Training Effectiveness Survey?

- 100 Training Effectiveness Survey Questions

- Benefits of Running Training Effectiveness Surveys

- Best Practices

- Conclusion

What is a Training Effectiveness Survey?

A training effectiveness survey is a structured feedback tool used to understand whether a learning program achieved its goals. It goes beyond just asking if people “liked” the training—it helps you measure whether the content was relevant, the delivery was clear, and whether learners feel confident applying what they learned on the job.

One of the most widely referenced frameworks here is the Kirkpatrick Model of Training Evaluation, created by Donald Kirkpatrick. It organizes evaluation into four levels:

- Reaction – Did participants find the training engaging, useful, and worth their time?

- Learning – Did they actually acquire the intended knowledge, skills, or attitudes?

- Behavior – Are they using those skills in their day-to-day work?

- Results – Did the training contribute to measurable business outcomes such as productivity, quality, safety, or customer satisfaction?

Post-training surveys usually focus on the first two levels—Reaction and Learning—but they also set the stage for measuring Behavior and Results through follow-ups, manager feedback, and performance data. This makes them a critical starting point for proving ROI and continuously improving your training programs.

100 Training Effectiveness Survey Questions

Below is a categorized list of 100 copy-ready questions you can adapt. Each includes a recommended format (Likert 5-point agreement scale, multiple-choice, checkbox, yes/no, or open-ended), so you can drop them straight into your next survey.

1. Overall Experience (10 questions)

- The training met my expectations. (Likert)

- I found the training relevant to my role. (Likert)

- The length of the training was appropriate. (Likert)

- I was able to stay engaged throughout the session. (Likert)

- The training was well-organized. (Likert)

- Would you recommend this training to a colleague? (Yes/No)

- How would you rate your overall satisfaction with this training? (Multiple-choice: Excellent / Good / Fair / Poor)

- The session was worth the time I invested. (Likert)

- What did you like most about this training? (Open-ended)

- What could be improved for future sessions? (Open-ended)

2. Learning Objectives (10 questions)

- The learning objectives were clearly stated. (Likert)

- The training objectives were met. (Likert)

- I understood how the content connected to the objectives. (Likert)

- The training addressed the skills I need in my job. (Likert)

- The learning objectives matched the pre-training communication. (Likert)

- Which objectives were most valuable to you? (Open-ended)

- Were there any objectives you felt were missing? (Open-ended)

- How confident are you in applying what you learned? (Multiple-choice: Very confident → Not at all confident)

- The objectives were realistic and achievable. (Likert)

- What additional skills would you like to see covered in future training? (Open-ended)

3. Content Quality (15 questions)

- The training materials were clear and easy to understand. (Likert)

- The content matched my expectations. (Likert)

- The examples provided were relevant to my work. (Likert)

- The balance between theory and practice was appropriate. (Likert)

- The case studies or examples helped me learn. (Likert)

- How well did the content match your skill level? (Multiple-choice)

- The content was up to date. (Likert)

- The amount of information presented was manageable. (Likert)

- The content avoided unnecessary jargon. (Likert)

- How would you rate the usefulness of the content overall? (Multiple-choice)

- The training deepened my knowledge of the topic. (Likert)

- The training addressed real-world challenges. (Likert)

- Which content areas were most helpful to you? (Open-ended)

- Which content areas were least helpful? (Open-ended)

- What additional content should be included? (Open-ended)

4. Trainer/Facilitator (10 questions)

- The trainer explained concepts clearly. (Likert)

- The trainer was knowledgeable about the subject. (Likert)

- The trainer encouraged participation. (Likert)

- The trainer handled questions effectively. (Likert)

- The trainer kept the session engaging. (Likert)

- How approachable was the trainer? (Multiple-choice)

- The trainer used real-world examples effectively. (Likert)

- The trainer managed time well. (Likert)

- The trainer created an inclusive learning environment. (Likert)

- Any feedback for the trainer? (Open-ended)

5. Delivery & Format (15 questions)

- The training platform or room was easy to navigate. (Likert)

- The pace of delivery was appropriate. (Likert)

- The session format (online/in-person) worked well. (Likert)

- Technical issues were minimal. (Likert)

- The training allowed for interaction and discussion. (Likert)

- The visuals (slides, handouts) were clear. (Likert)

- The audio quality was clear. (Likert)

- Breaks were well-timed. (Likert)

- Which delivery method did you prefer? (Multiple-choice)

- How comfortable were you using the platform? (Likert)

- The delivery supported different learning styles. (Likert)

- The training was accessible (captions, transcripts, etc.). (Likert)

- What challenges did you face during delivery? (Open-ended)

- How could the delivery be improved? (Open-ended)

- Would you prefer a different delivery method in the future? (Multiple-choice)

6. Application & Impact (20 questions)

- I can apply what I learned immediately. (Likert)

- The training will help me perform better at work. (Likert)

- The training improved my confidence in the topic. (Likert)

- The training will positively impact my team. (Likert)

- The training supports my career development. (Likert)

- The training addressed real challenges I face at work. (Likert)

- I can already identify ways to use the training. (Likert)

- This training will help reduce errors or mistakes. (Likert)

- This training will improve customer outcomes. (Likert)

- How likely are you to use the new skills within 30 days? (Multiple-choice)

- The training filled a critical knowledge gap. (Likert)

- The training reinforced skills I already had. (Likert)

- What specific tasks will you apply this training to? (Open-ended)

- What challenges might stop you from applying it? (Open-ended)

- How can the company support you in applying it? (Open-ended)

- Did you discuss applying this training with your manager? (Yes/No)

- Do you need follow-up support? (Yes/No)

- What kind of support would help you apply the training? (Open-ended)

- Do you see this training affecting your long-term growth? (Yes/No/Unsure)

- The training will contribute to organizational goals. (Likert)

7. Accessibility & Inclusion (10 questions)

- The training was accessible to all participants. (Likert)

- I was able to fully participate without barriers. (Likert)

- The training considered diverse perspectives. (Likert)

- Accessibility features (captions, transcripts) were helpful. (Likert)

- The training environment was inclusive. (Likert)

- Were you able to request accommodations if needed? (Yes/No)

- The materials were easy to read and understand. (Likert)

- I felt respected during the session. (Likert)

- What could make the training more inclusive? (Open-ended)

- What accessibility supports were most valuable to you? (Open-ended)

8. Open Feedback (10 questions)

- What was the most valuable part of this training? (Open-ended)

- What was the least valuable part? (Open-ended)

- Did the training meet your expectations overall? (Yes/No)

- How could we improve this training for the future? (Open-ended)

- Was the timing of the training convenient? (Yes/No)

- If you could change one thing, what would it be? (Open-ended)

- Would you like more advanced training on this topic? (Yes/No)

- Are there other topics you’d like training on? (Open-ended)

- Any final comments or suggestions? (Open-ended)

- Overall, how would you rate this training? (Multiple-choice: Excellent → Poor)

Benefits of running training effectiveness surveys

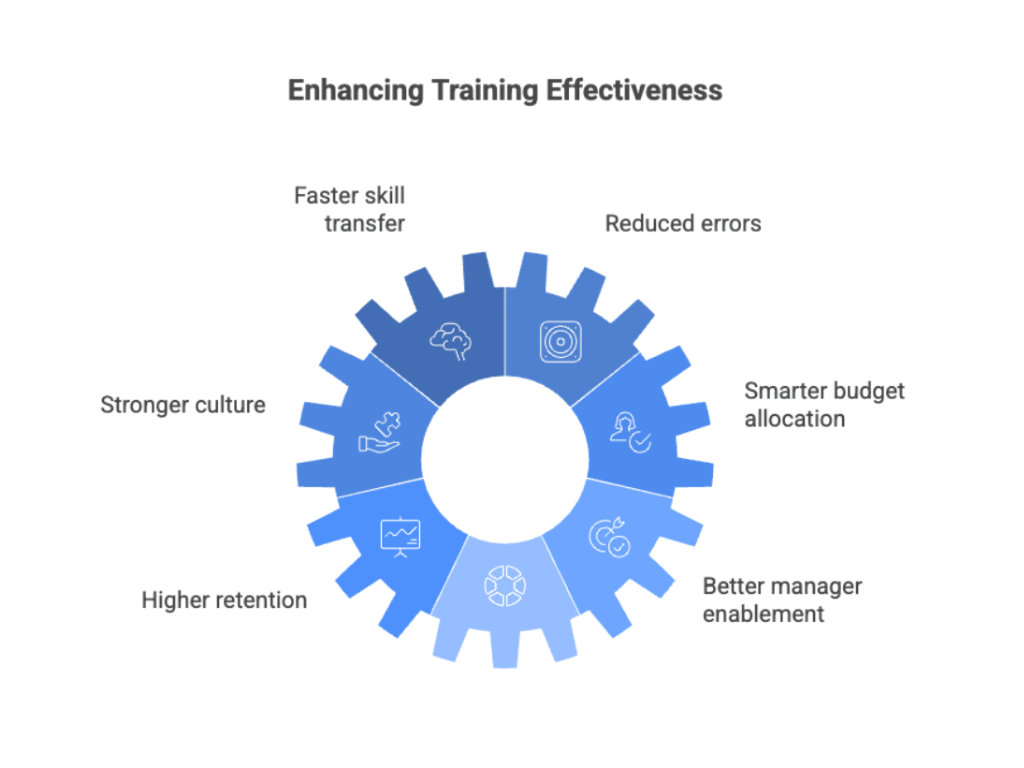

1. Faster skill transfer and measurable performance gains

The sooner employees can apply what they’ve learned, the sooner the business sees value. Post-training surveys uncover the sticking points: maybe a workflow step wasn’t clear, or there wasn’t enough practice time. Fixing those issues means employees use the skill right away rather than weeks later.

For example, adding a checklist or short practice block after a confusing module can shorten “time-to-first-use,” so productivity gains show up sooner.

2. Reduced errors, rework, and compliance risks

When learners highlight confusing instructions or unrealistic examples, you’re essentially getting an early warning system for mistakes that would otherwise show up in operations. Adjusting the content or adding practical job aids reduces costly rework and ensures employees perform tasks correctly the first time. This doesn’t just save money—it also minimizes safety and compliance risks, which regulators like OSHA stress as part of effective training and evaluation practices.

3. Stronger culture of safety and accountability

Surveys help confirm whether employees actually understand how to respond to real-life scenarios, not just classroom exercises. If gaps appear, training teams can revise modules to better reflect real-world risks. This ongoing loop is crucial for industries where safety and compliance are non-negotiable. It also creates a culture where employees feel the organization is serious about equipping them to do their jobs safely and confidently.

4. Smarter budget allocation (what to scale, what to sunset)

Many organizations spend heavily on employee learning, but not all programs deliver equal value. By comparing survey responses across different cohorts, formats, or instructors, HR and L&D leaders can see which investments are paying off. High-impact courses can be scaled, while low-performing ones can be improved or retired. This ensures training budgets are directed toward initiatives that actually move the needle for business outcomes.
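As a rough illustration of that comparison, here is a minimal Python sketch that averages Likert answers per cohort. The score mapping, the cohort names, and the sample responses are all made up for the example; a real survey export will look different.

```python
from collections import defaultdict
from statistics import mean

# Assumed mapping of a 5-point agreement scale to numeric scores.
LIKERT_SCORES = {
    "Strongly disagree": 1,
    "Disagree": 2,
    "Neutral": 3,
    "Agree": 4,
    "Strongly agree": 5,
}


def mean_score_by_cohort(responses):
    """Return the mean Likert score per cohort, rounded to two decimals.

    `responses` is an iterable of (cohort, answer) pairs.
    """
    scores = defaultdict(list)
    for cohort, answer in responses:
        scores[cohort].append(LIKERT_SCORES[answer])
    return {cohort: round(mean(values), 2) for cohort, values in scores.items()}


# Hypothetical answers to "The training will help me perform better at work."
sample_responses = [
    ("Instructor-led", "Agree"),
    ("Instructor-led", "Strongly agree"),
    ("Instructor-led", "Agree"),
    ("Self-paced", "Neutral"),
    ("Self-paced", "Disagree"),
    ("Self-paced", "Agree"),
]

cohort_means = mean_score_by_cohort(sample_responses)
for cohort, score in cohort_means.items():
    print(f"{cohort}: {score}")
```

With only a handful of responses per cohort, a gap like this is a prompt to look closer, not proof that one format is better.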

5. Higher retention by supporting growth

Employees stay longer when they feel the company is investing in their growth and giving them tools to succeed. Surveys provide tangible evidence that feedback is heard and acted upon, making training more relevant to daily work. Given that replacing one employee can cost about one-third of their annual salary (SHRM), reducing turnover through more effective training has a direct financial payoff while also strengthening morale.
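To put a number on that payoff for leadership, the arithmetic can be sketched in a few lines of Python. The one-third replacement cost comes from the SHRM figure above; the salary and the number of departures avoided are placeholder values, not data.

```python
def replacement_cost(annual_salary, cost_fraction=1 / 3):
    """Estimate the cost of replacing one employee.

    cost_fraction defaults to the one-third-of-salary figure cited above.
    """
    return annual_salary * cost_fraction


def turnover_savings(departures_avoided, average_salary, cost_fraction=1 / 3):
    """Estimate the savings from departures that did not happen."""
    return departures_avoided * replacement_cost(average_salary, cost_fraction)


# Placeholder inputs: 5 fewer departures at an average salary of 60,000.
savings = turnover_savings(departures_avoided=5, average_salary=60_000)
print(f"Estimated savings: ${savings:,.0f}")
```

Treat the result as an order-of-magnitude estimate: attributing retained employees to a single training program is itself an assumption.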

6. Better manager enablement (the transfer multiplier)

A common barrier to applying new skills is lack of support from managers—no time to practice, no system access, or no reinforcement in 1:1s. Surveys can surface these issues quickly, so HR can involve managers in clearing obstacles. This tightens the link between learning and on-the-job coaching, ensuring new skills don’t fade but become part of daily work.

Best practices

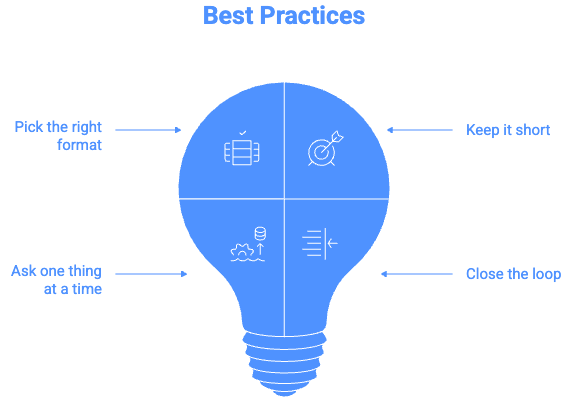

- Keep it short: Aim for 10–20 questions. Longer surveys turn people off and reduce the quality of responses (Pew Research Center).

- Pick the right format: Use agreement scales for opinions, multiple-choice when you want a single clear priority, checkboxes to capture all possible blockers, yes/no for quick checks, and open-ended for detailed feedback.

- Ask one thing at a time: Avoid combining two ideas in one question. Make sure every question has a clear owner who can act on the feedback (trainer, content designer, or HR admin).

- Close the loop: Within two weeks, share what you heard, what you’re changing, and when people will see the updates. This builds trust and shows their input matters.

Designing an effective training survey is one thing—running it smoothly is another. That’s where a platform like Vantage Pulse helps: quick launches, anonymous responses, instant analytics, and clear insights you can share with leadership.

Conclusion

A strong set of training effectiveness survey questions turns learner feedback into next-week decisions. Keep your survey short, choose formats that fit the decisions you need, and include a few items on confidence and intent so you can act fast. Share what’s changing, then compare the next cohorts to confirm impact. Use the 100 questions above to build your survey in minutes, ship visible fixes, and turn feedback into momentum—one course, one improvement, one stronger team at a time.